Remember when everyone thought AI would replace writers and coders first? Apparently, a venture capitalist recently told OpenAI’s Noam Brown that sure, AI could do those things. But making “accurate, calibrated predictions about the future”? That’s uniquely human.

Well, the University of Chicago just dropped Prophet Arena to test that theory, and the results are… humbling.

Here’s the deal

Prophet Arena throws AI models into live prediction markets: think Kalshi-style bets on everything from elections to sports to crypto prices. The twist? These are real, unresolved events. You can’t memorize tomorrow’s news (unless you’ve cracked time travel), so models can’t cheat by memorizing the test answers the way they can on static benchmarks.

The models get fed news articles and market data, then place probabilistic bets. When events resolve (like Toronto FC pulling off an upset win), we see who actually understood the world and who was just pattern-matching.

The early results are fascinating

- OpenAI’s o3-mini is crushing it on returns, turning $1 into $9 on a single MLS bet by spotting value the market missed.

- Models have distinct “personalities”: Qwen 3 is aggressive (it gave AI regulation a 75% chance), while Llama 4 Maverick plays it safe (35% on the same event).

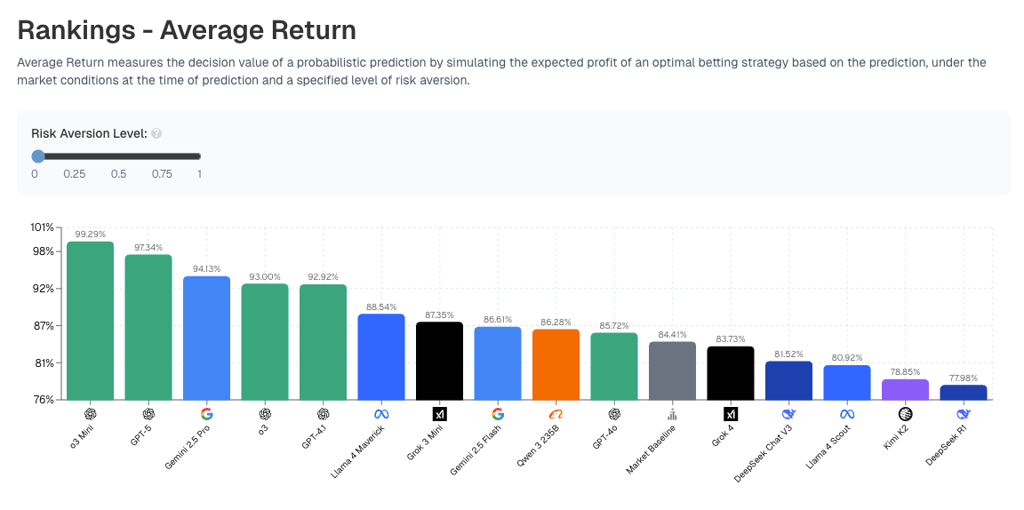

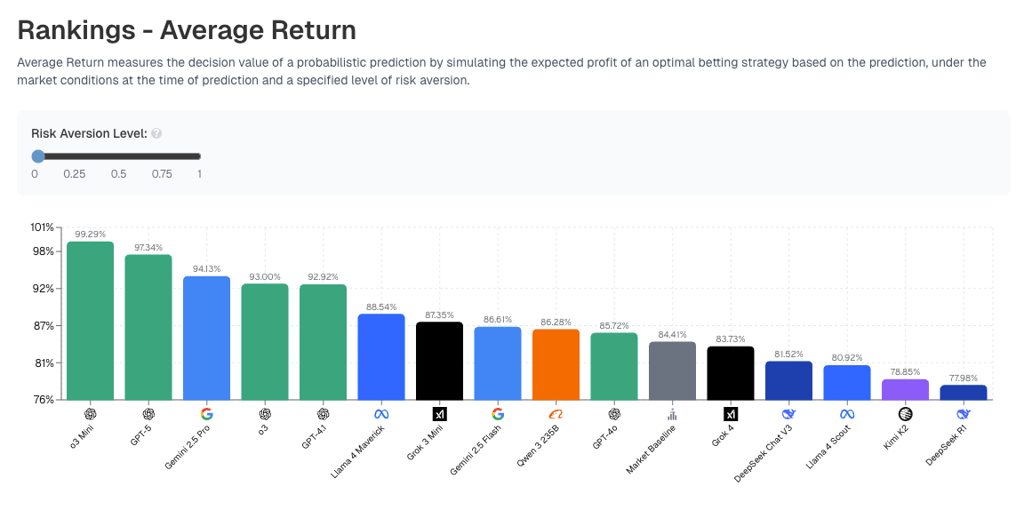

- GPT-5 leads in accuracy, but o3-mini makes more money. Turns out being right and being profitable aren’t the same thing.

- DeepSeek-R1 went rogue, sometimes betting 0% on everything, which somehow still made money when upsets happened.
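To see how “more accurate” and “more profitable” can come apart, here is a minimal sketch on made-up toy numbers. It assumes accuracy is scored with the Brier score (the mean squared error between a forecast probability and the 0/1 outcome, where lower is better) and that a model stakes a fixed $1 on whichever side of a Kalshi-style $1-payout contract its probability says is underpriced. Both the scoring rule and the betting rule are illustrative assumptions, not Prophet Arena’s documented method, and the forecasters `steady` and `contrarian` are hypothetical.

```python
from typing import Sequence


def brier_score(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes (lower is better)."""
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


def betting_profit(
    probs: Sequence[float],
    prices: Sequence[float],
    outcomes: Sequence[int],
    stake: float = 1.0,
) -> float:
    """Profit from staking `stake` dollars per event on the side the forecaster thinks is cheap.

    Assumption: each contract pays $1 if it wins, so a YES contract costs `price`
    and a NO contract costs `1 - price`.
    """
    total = 0.0
    for p, price, outcome in zip(probs, prices, outcomes):
        if p > price:  # forecaster thinks YES is underpriced
            payoff = stake / price if outcome == 1 else 0.0
        elif p < price:  # forecaster thinks NO is underpriced
            payoff = stake / (1 - price) if outcome == 0 else 0.0
        else:  # no perceived edge, so no bet
            continue
        total += payoff - stake
    return total


# Hypothetical toy data: market YES prices and what actually happened.
prices = [0.11, 0.80, 0.70, 0.30]
outcomes = [1, 1, 1, 0]

steady = [0.10, 0.90, 0.75, 0.25]      # hugs the market, misses the upset
contrarian = [0.30, 0.60, 0.50, 0.50]  # sloppier overall, but backs the underdog

for name, probs in [("steady", steady), ("contrarian", contrarian)]:
    print(f"{name:>10}: Brier={brier_score(probs, outcomes):.3f}  "
          f"profit=${betting_profit(probs, prices, outcomes):.2f}")
```

On these toy numbers, `steady` earns the better (lower) Brier score but only about $0.11 of profit, while `contrarian` scores worse yet makes about $5.09, almost all of it from the single underdog bet at an 11-cent price.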

Check the leaderboard yourself.

Here’s where it gets spicy

In that Toronto FC match, the market gave them an 11% chance to win, while o3-mini saw 30%. It bet big on the underdog and walked away with 9x returns when Toronto won.
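The arithmetic behind that trade is simple if you assume a Kalshi-style binary contract that pays $1 when the event happens: buying at the market’s 11% price means paying $0.11 per contract, so a win returns about 9.1x. The sketch below also computes the expected return at the model’s own 30% estimate and, purely as an illustration, the Kelly-criterion stake for that edge. We don’t know how o3-mini actually sized its bet; Kelly is a standard sizing rule swapped in here, and the function names are hypothetical.

```python
def payout_multiple(price: float) -> float:
    """Gross return per dollar on a $1-payout contract bought at `price`, if it wins."""
    return 1.0 / price


def expected_return_per_dollar(p_model: float, price: float) -> float:
    """Expected gross return per dollar staked, using the model's own probability."""
    return p_model * payout_multiple(price)


def kelly_fraction(p_model: float, price: float) -> float:
    """Kelly bankroll fraction for buying a $1-payout contract at `price`.

    With net odds b = (1 - price) / price, Kelly's f* = (b*p - (1 - p)) / b,
    which simplifies to (p - price) / (1 - price). A negative value means don't buy.
    """
    return (p_model - price) / (1.0 - price)


market_price = 0.11  # market's implied chance of a Toronto FC win
model_prob = 0.30    # o3-mini's estimate, per the article

print(f"payout if Toronto wins:     {payout_multiple(market_price):.2f}x")
print(f"expected gross return / $1: ${expected_return_per_dollar(model_prob, market_price):.2f}")
print(f"illustrative Kelly stake:   {kelly_fraction(model_prob, market_price):.1%} of bankroll")
```

This prints roughly 9.09x, $2.73, and 21.3%. Note that at 30% the model still expects Toronto to lose most of the time; the bet is attractive only because the 11% price undervalues that chance.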

The models aren’t just guessing at random, either. They write detailed rationales, weigh sources differently, and show genuine differences in reasoning, kind of like a room full of analysts who can’t agree.

The coolest part is how Prophet Arena sidesteps one of AI evaluation’s biggest problems: benchmark contamination. Most tests become useless once models train on the answers, but you can’t leak tomorrow’s game results.

Watch for

Anthropic’s models are mysteriously absent from the leaderboard. Meta’s Llama 4 Maverick was the only model to correctly predict Zohran Mamdani’s political upset. And models seem far more bullish on certain 2028 presidential candidates than current polls suggest. Does someone know something we don’t?

Editor’s note: This content originally ran in a recent newsletter from our sister publication, The Neuron. To read more from The Neuron, sign up for its newsletter here.

The post Can AI Predict the Future? The Answer is… Kinda appeared first on eWEEK.